Many nutrition apps integrate with fitness wearables, or they provide you with the option of telling the app how many calories you burned each day via exercise, for the purpose of adjusting your daily calorie intake target. Many people who are interested in using MacroFactor ask if the app has this functionality, and some new users are surprised to learn that it doesn’t. I can certainly understand why people may expect MacroFactor to have this feature, and why they’d view its omission as an oversight. However, this article will explain why we intentionally decided to not use energy expenditure data from fitness wearables or manually-entered estimates of exercise energy expenditure to inform MacroFactor’s recommendations. Furthermore, this article will explore why omitting this feature is actually a strength of MacroFactor’s approach.

Finally, even if you’re not interested in using MacroFactor, I think you’ll still glean plenty of interesting and useful information from this article about the accuracy (or lack thereof) of wearable devices for estimating energy expenditure, and the complications that arise when trying to predict how exercise will impact your total daily energy expenditure.

To start with, let’s first explore how fitness wearables estimate energy expenditure.

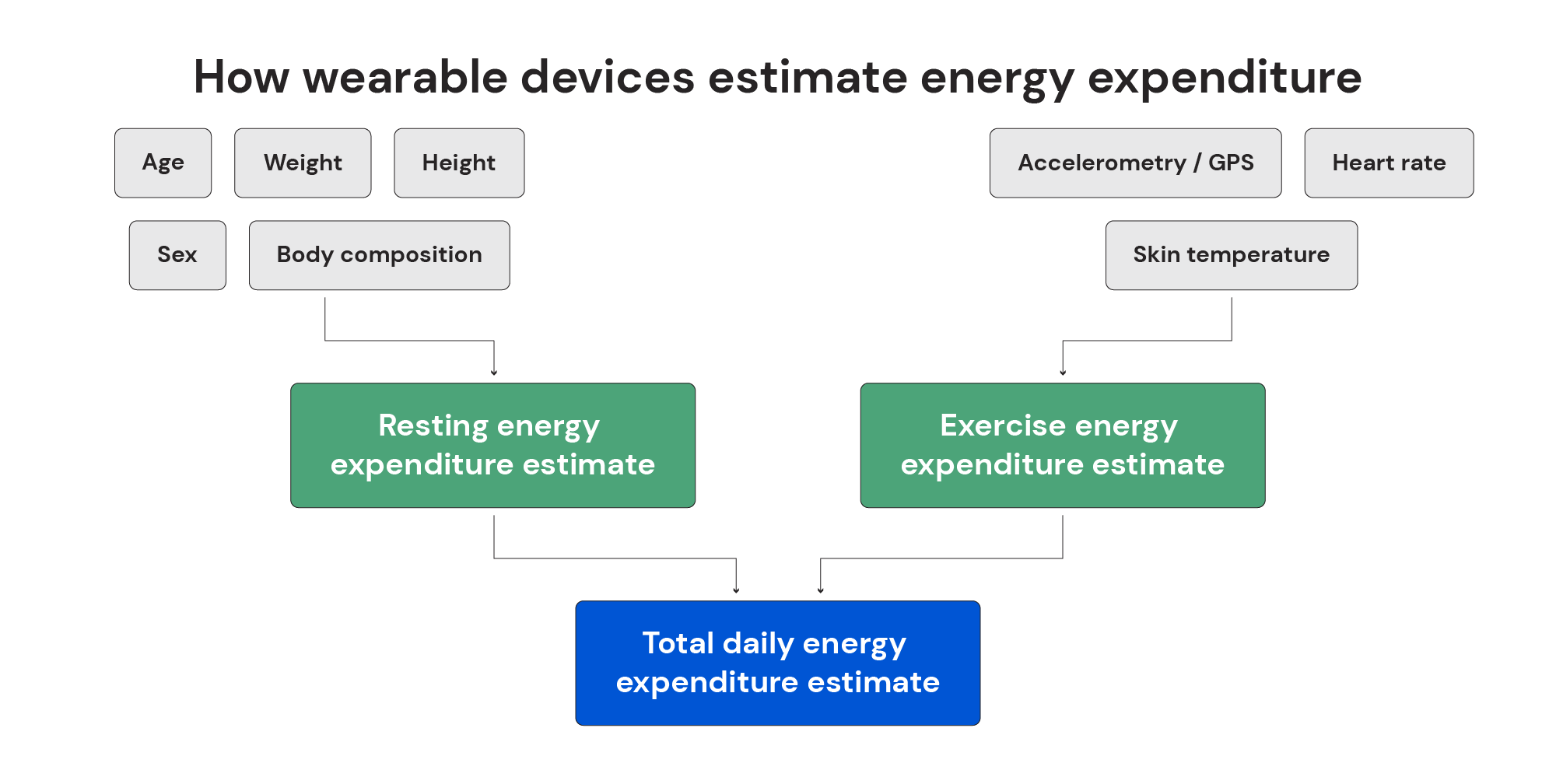

Each company that produces wearable devices has its own proprietary algorithm, but the basic process they use for estimating energy expenditure is pretty straightforward. First, they estimate how many calories you burn at rest based on basic anthropometric and demographic characteristics: age, height, weight, sex, and perhaps an estimate of body composition. Then, they estimate how many additional calories you burn during day-to-day activities (walking around, doing chores, etc.) and exercise using: a) technologies that estimate the amount you’re moving (accelerometry and/or GPS), b) heart rate data, or c) both heart rate data and estimates of movement. Some wearable devices also estimate skin temperature to further inform their energy expenditure estimates.
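To make that two-step process concrete, here is a minimal Python sketch. It is not any manufacturer's algorithm (those are proprietary). It assumes the published Mifflin-St Jeor equation for resting energy expenditure and the common MET approximation for activity, and the function names, the example user, and the MET value are all hypothetical.

```python
def resting_kcal_per_day(weight_kg, height_cm, age_years, sex):
    """Resting energy expenditure via the Mifflin-St Jeor equation.

    This is an assumption for illustration; real devices use their own models.
    """
    base = 10 * weight_kg + 6.25 * height_cm - 5 * age_years
    return base + 5 if sex == "male" else base - 161


def activity_kcal(met, weight_kg, hours):
    """Calories burned above rest, using the approximation that
    1 MET is about 1 kcal per kg of body weight per hour."""
    return (met - 1) * weight_kg * hours


# Hypothetical user: 30-year-old male, 80 kg, 180 cm, who runs for 30 minutes.
rest = resting_kcal_per_day(80, 180, 30, "male")
run = activity_kcal(met=8, weight_kg=80, hours=0.5)  # the MET value is illustrative
print(rest, run, rest + run)
```

In a real device, the movement and heart rate sensors would stand in for the hand-picked MET value, which is exactly where much of the estimation error creeps in.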

Overall, that’s a perfectly logical process to estimate energy expenditure, since all of those variables do correlate with energy expenditure: older people tend to burn fewer calories than younger people, taller and heavier people tend to burn more calories than shorter and lighter people, males tend to burn more calories than females, people who move more tend to burn more calories than people who move less, people who experience larger or longer elevations in heart rate when exercising tend to burn more calories than people who experience smaller or shorter elevations in heart rate when exercising, and an elevation in skin temperature is generally a good indicator that you’re expending more energy. However, estimates always come with some degree of estimation error, which invites us to ask: how accurately do wearables estimate energy expenditure?

Unfortunately, wearable devices do a notoriously poor job of estimating energy expenditure. The inaccuracy of wearable devices for estimating energy expenditure is thoroughly documented in the research literature, but many consumers aren’t aware of this track record.

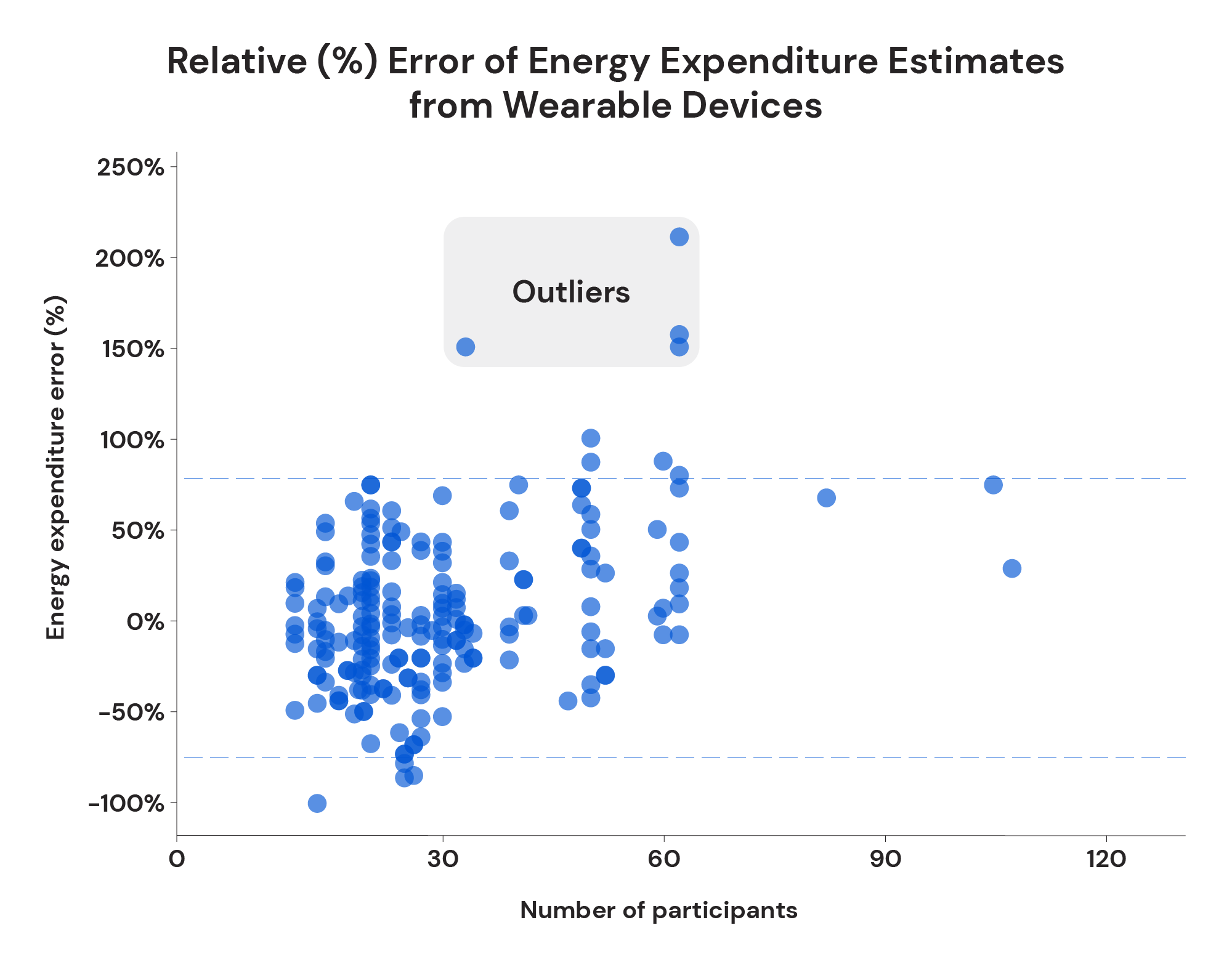

For example, a 2020 systematic review found that group-level estimates of energy expenditure may be off by more than 50% in controlled settings. And, of course, individual-level errors span an even wider range than group-level errors. If a study finds that a particular device overestimates energy expenditure by an average of 15% for a group of people, that means some people will have errors closer to 0%, but other people will have errors closer to 30%. Furthermore, the same systematic review found that, in free-living settings, wearable devices under- or overestimated energy expenditure by more than 10%, on average, a whopping 82% of the time.

Some of the devices covered in that 2020 systematic review have since been discontinued, so it’s fair to ask whether newer devices are more accurate. To that end, a 2022 study on a more recent crop of wearables investigated their accuracy during sitting, walking, running, cycling, and resistance training. It found that “for energy expenditure, all 3 devices displayed poor accuracy for all 5 physical activities (CVs between 14.68-24.85% for Apple Watch 6, 16.54-25.78% for Polar Vantage V and 13.44-29.66% for Fitbit Sense).” Furthermore, the mean absolute percentage errors ranged from 14.9%-47.8%.

Let’s drill down into two discrete examples:

The best performance of any device during any exercise was observed when the Apple Watch 6 was used to estimate energy expenditure during running. Its mean absolute percentage error was 14.9 ± 9.8%. In other words, about two-thirds of individuals can expect errors ranging from 5.1-24.7%, and errors up to 34.5% are perfectly normal – within 2 standard deviations from the mean (assuming that errors are normally distributed). In other words, if you think you burned a total of 1000 calories jogging last week, it’s entirely possible that you actually burned 1345 calories, but it’s also possible that you only burned 655 calories. Keep in mind, that’s the best-case scenario observed in this study.

For a more middle-of-the-road example, the Polar Vantage V estimated energy expenditure during resistance exercise with a mean absolute percentage error of 34.6 ± 32.6%. In other words, about two-thirds of individuals can expect errors ranging from 2.0-67.2%, and errors approaching 100% (99.8%, to be exact) are perfectly normal – within 2 standard deviations from the mean (again, assuming a normal distribution of errors). I wouldn’t be shocked if simply guessing your energy expenditure provided you with a more accurate estimate.
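The ranges quoted in these two examples come from simple arithmetic on the reported mean and standard deviation. The sketch below reproduces them, under the same assumption the text makes (normally distributed errors); the function name is hypothetical.

```python
def error_ranges(mean_pct, sd_pct):
    """Return the +/- 1 SD range and the +2 SD upper bound for a percentage error,
    assuming errors are normally distributed."""
    one_sd = (round(mean_pct - sd_pct, 1), round(mean_pct + sd_pct, 1))
    upper_two_sd = round(mean_pct + 2 * sd_pct, 1)
    return one_sd, upper_two_sd


print(error_ranges(14.9, 9.8))   # Apple Watch 6, running
print(error_ranges(34.6, 32.6))  # Polar Vantage V, resistance exercise
```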

Now, I don’t want to make it sound like fitness wearables have no utility whatsoever. The same 2020 systematic review that found that wearables do a poor job of estimating energy expenditure also found that wearables actually do a pretty good job of measuring heart rate and estimating step counts. There’s also evidence that using devices to track step counts can be used to improve weight loss success. However, it should be clear that wearable devices have a long way to go before they can be used to estimate energy expenditure accurately and reliably for most people.

With that in mind, the primary reason why we don’t use energy expenditure estimates from wearable devices to inform MacroFactor’s recommendations should be obvious: they don’t provide us with accurate data. Incorporating energy expenditure data from wearable devices would necessarily entail incorporating their estimation error, resulting in worse calorie recommendations overall.

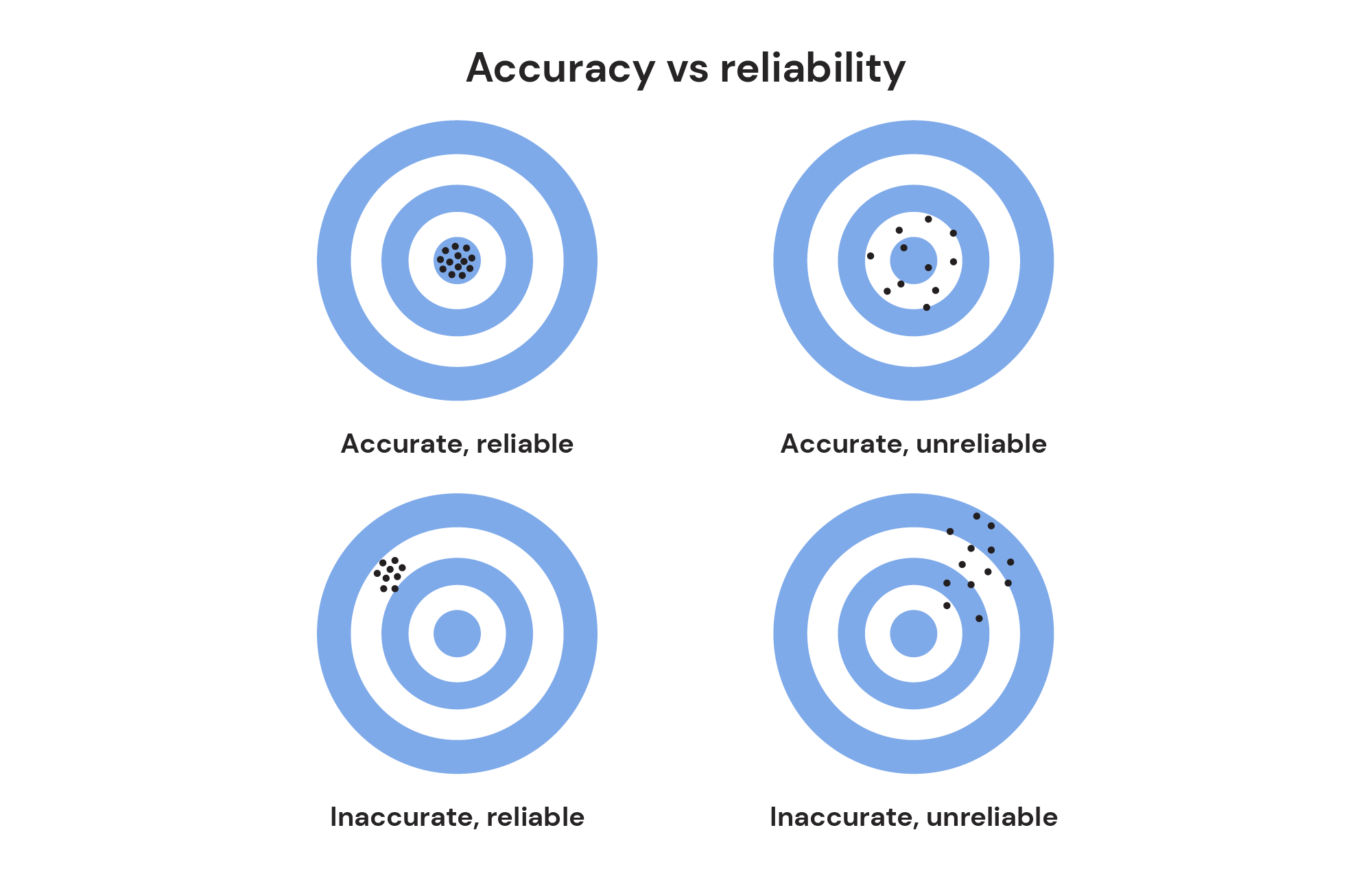

Scientifically minded readers may raise an understandable objection to the prior paragraph: “maybe wearable devices don’t accurately estimate energy expenditure, but they’re reliable enough to provide useful data, right?”

That’s a perfectly reasonable question to ask. In many cases, inaccurate, reliable data is almost as useful as accurate, reliable data. Reliability tells you how repeatable a measurement is: if you measure the same thing multiple times, do you get the same result each time?

For example, maybe you weigh exactly 200 pounds, but your scale says you weigh 185 pounds. In that case, your scale would be very inaccurate. However, if you step on the scale five times, and it records your weight as 185 pounds each time, it would be a reliable scale. Furthermore, if you gain two pounds, and your scale tells you that your weight increased by two pounds, that means your scale can be used to measure changes in weight with both accuracy and reliability. So, your scale would have poor accuracy, but it would still give you plenty of useful information about weight changes over time.

Applying this concept to wearable devices, their energy expenditure data would still be quite useful if they were known to have high reliability. If a Garmin watch underestimates your energy expenditure by 600 calories per day, it wouldn’t be ideal for directly determining your calorie targets; however, if the error was consistent in both direction and magnitude, it would be very useful for knowing the degree to which your energy expenditure changed over time.
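The distinction between accuracy and reliability can be expressed in a few lines of Python. In this sketch, bias (the average reading minus the true value) stands in for accuracy, and the spread of repeated readings stands in for reliability. The function name and the second set of readings are made up for illustration.

```python
from statistics import mean, pstdev


def bias_and_spread(readings, true_value):
    """Bias (mean reading minus the true value) reflects accuracy;
    the spread of repeated readings reflects reliability."""
    return mean(readings) - true_value, pstdev(readings)


# The scale from the example: it always reads 185 lb when you weigh 200 lb.
print(bias_and_spread([185, 185, 185, 185, 185], true_value=200))

# A hypothetical unreliable device: correct on average, but inconsistent.
print(bias_and_spread([170, 230, 190, 215, 195], true_value=200))
```

The first device has a large bias and zero spread, so changes in its readings are still trustworthy. The second has no bias on average, but any single reading (or change between readings) tells you very little.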

So, is that the case? Is the poor accuracy of wearable devices saved by high reliability?

Surprisingly…we don’t know.

In their 2020 systematic review, Fuller and colleagues were unable to find any studies reporting the intradevice reliability of any wearables for estimating energy expenditure. To quote the authors: “No studies reported intradevice reliability for heart rate or energy expenditure.”

It’s possible that a couple of intradevice reliability studies have been published in the intervening years, but we’re still a long way from establishing the generalized reliability of wearables for estimating energy expenditure and, more importantly, changes in energy expenditure.

So, the basic reason we don’t incorporate data from wearable devices into MacroFactor’s algorithms and recommendations should be obvious at this point: that data is known to be inaccurate, and its reliability is unknown. Furthermore, the magnitude and direction of the errors differ from device to device, and from user to user. We aim to generate nutrition recommendations and adjustments that will help users meet their weight gain, loss, or maintenance goals; based on the current evidence, incorporating low-quality data from wearable devices into our algorithms would run counter to that goal.

Beyond concerns regarding the accuracy and reliability of the data from wearable devices, there are a few other reasons why we don’t use estimates of energy burned during exercise to inform daily calorie targets in MacroFactor. In some other apps, you can enter the number of calories burned via exercise each day (based on either manual estimates, or estimates from a wearable device) to adjust your daily calorie target. For example, your calorie target may be 2000 calories per day, but if you tell the app you burned 500 calories via exercise, your daily calorie target will increase to 2500 calories. There are a few drawbacks to this approach.

The Drawbacks of “Eating Back” Calories Burned During Exercise

First, this sort of system has the potential to encourage people to adopt an unhelpful approach to exercise.

Exercise has a whole host of benefits that extend far beyond mere energy expenditure: building and preserving strong muscles and bones, improving mood and mental health, increasing energy levels, reducing stress, improving flexibility, etc. Furthermore, plenty of beneficial forms of exercise don’t burn that many calories – some forms of yoga, tai chi, and most approaches to resistance training immediately come to mind. Putting energy expenditure front and center has the potential to make people focus on one acute outcome of exercise (increasing energy expenditure for the day so you can eat more), which is best accomplished by one particular form of exercise (cardio, primarily), while potentially neglecting the other acute and long-term benefits that other forms of exercise (and varied forms of exercise) can give you.

More importantly, a system that allows you to “eat back” calories expended via exercise has the potential to encourage an adversarial relationship with exercise, or potentially even encourage disordered eating patterns.

For example, if you’re attempting to lose weight and you have a relatively low calorie target, it may be tempting to go for a jog simply as a means to an end: you want to be able to tell your app that you burned 500 calories exercising so that you can eat 500 more calories at dinner. Now, there’s nothing wrong with the general mindset of, “hey, if I move around more I’ll probably be able to eat more,” but this approach to exercise has the potential to turn quite toxic. You can start to view exercise, not as a fun and productive way to challenge yourself, not as a way to improve and expand your physical capabilities over time, and not as a way to relieve stress and improve your mood, but as an unpleasant chore that is simply a means to an end – being able to eat a few more calories at dinner. This transactional view of trading exercise for food is commonly observed in individuals with disordered eating patterns.

In addition to the host of benefits associated with regular exercise, exercise is a major predictor of successful weight loss maintenance. If you develop an adversarial relationship with exercise when attempting to lose weight – you only do it to burn more calories or to increase your daily calorie targets, but you come to view it as an unpleasant obligation – you’ll probably be less likely to stick with it long-term, which can make weight loss maintenance considerably more challenging.

More insidiously, however, framing exercise primarily as a method of burning calories might encourage binge-purge behaviors for some people. If you feel out of control over how much you’re eating, it might be tempting to try to burn off the extra calories later. At the end of the day, your nutrition tracking app may show you that your energy intake and energy expenditure were balanced – high intake offset by a ton of exercise – but these sorts of behaviors can lead down a bad road if they persist.

So, even if wearables did accurately and reliably estimate energy expenditure during exercise, we’d still need to think long and hard about whether or not the potential benefits of this feature – letting users log exercise calories, for the purpose of informing daily calorie targets – outweighed the risks. I’m not sure they do. Thus, this feature would potentially conflict with our core goal of reducing the stress associated with dieting and nutrition tracking.

However, I doubt we’ll ever need to have that discussion for one simple reason: even if wearables accurately measured how many calories you burn during exercise, MacroFactor doesn’t need that data to make appropriate calorie adjustments and recommendations for people trying to gain, lose, or maintain weight.

That may seem counterintuitive: “if MacroFactor is making nutrition recommendations, don’t I need to tell it how many calories I’m burning via exercise?”

In actuality, our system works the other way around: based on your nutrition and weight data, we figure out how many calories you’re burning. That may seem like a semantic difference, but it comes with two major advantages and only one disadvantage (which can be easily circumvented).

Advantage 1: Better accounting for energy compensation

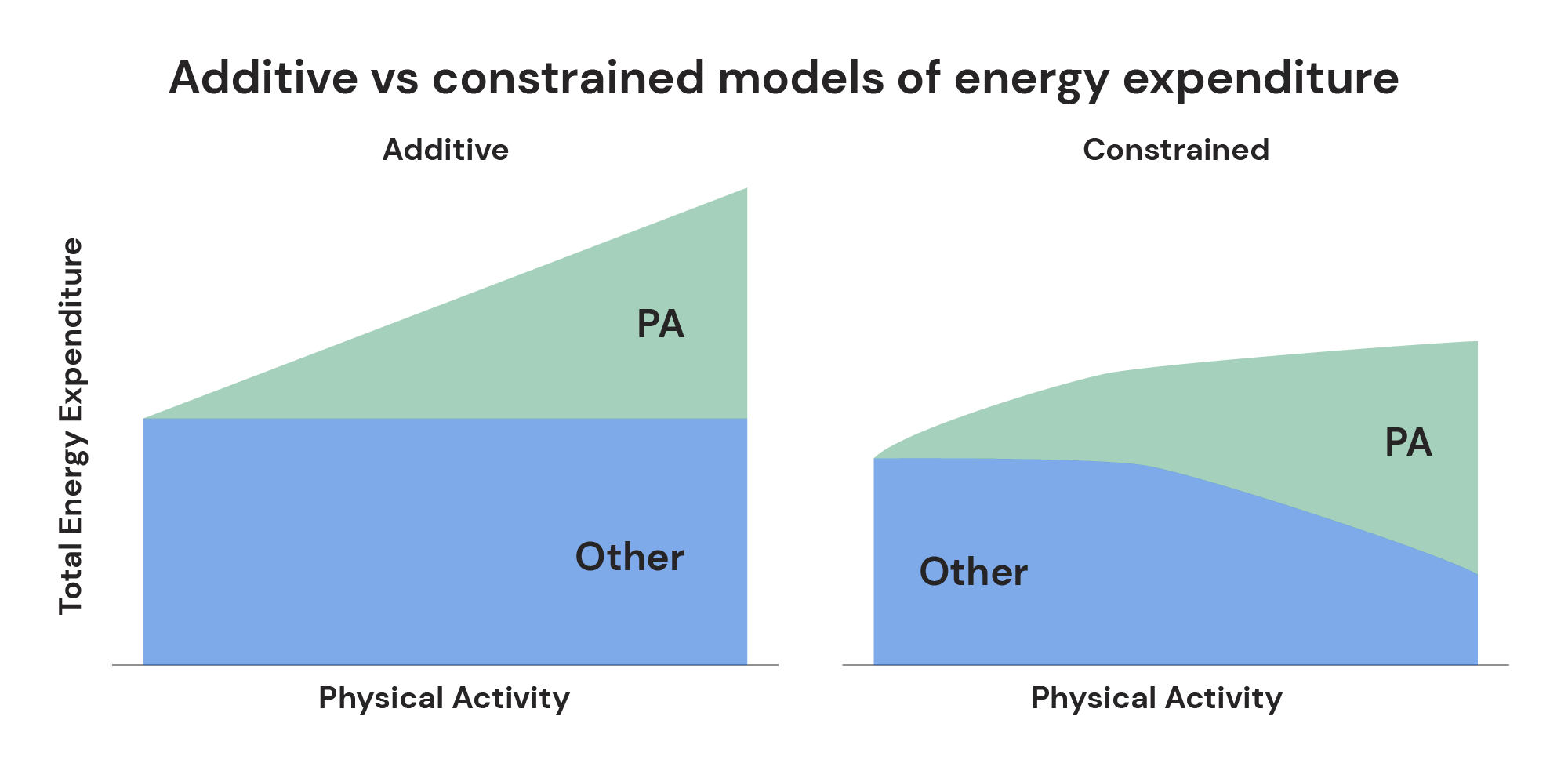

First, logging energy expenditure from exercise for the purpose of adjusting daily calorie targets is predicated on a pretty major assumption: burning more calories via exercise increases your total energy expenditure for the day in an additive fashion. On its face, that seems like a reasonable assumption: if you normally burn 2000 calories per day, and you burn an extra 500 calories via exercise, it makes sense that your total energy expenditure for the day would be 2500 calories. However, the actual relationship between activity levels and total daily energy expenditure is quite a bit more complex than that.

Welcome to the wonderful world of energy compensation.

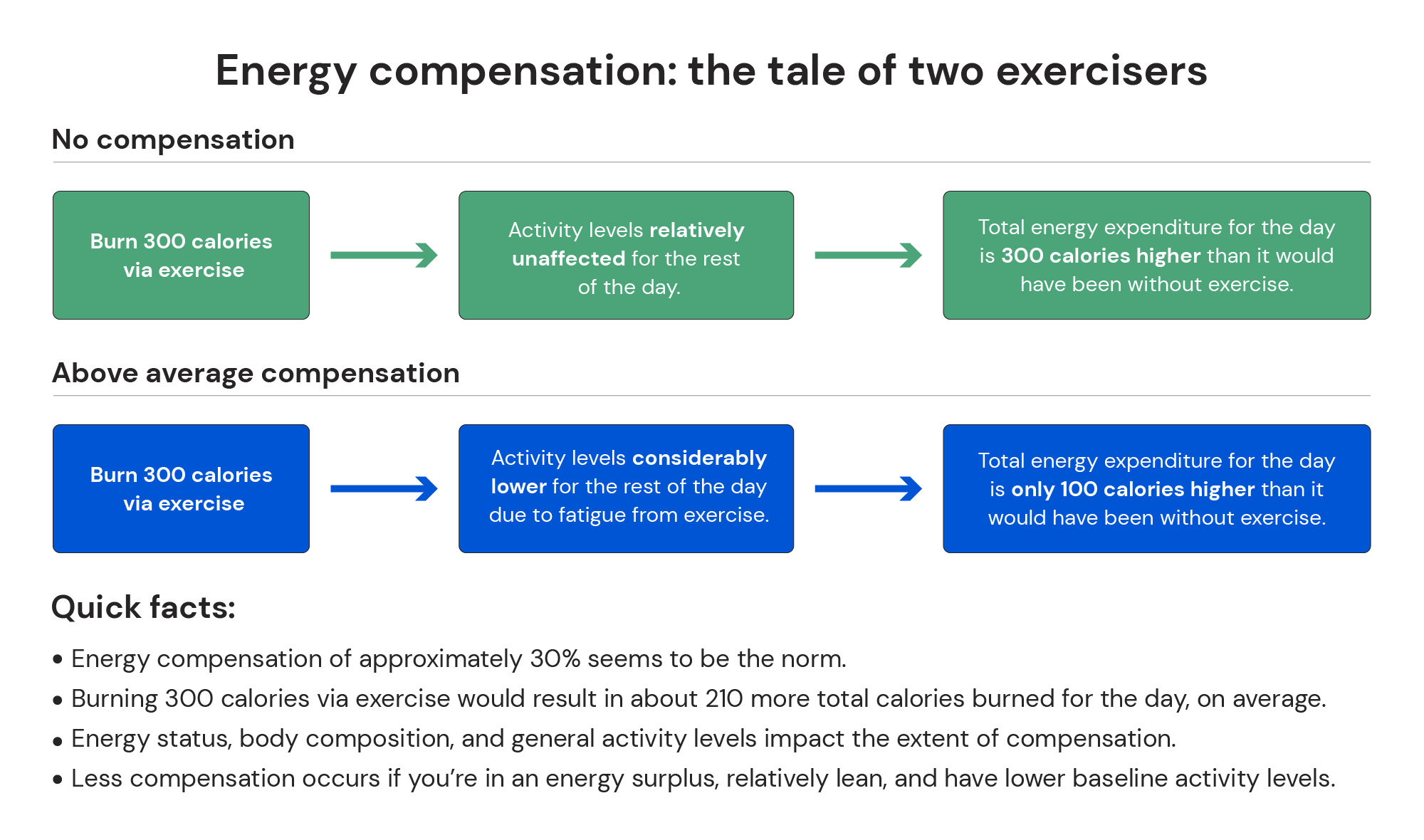

Exercise doesn’t occur in a vacuum. It displaces other activities, and it has effects on your behaviors throughout the rest of the day. If you go for a morning jog, it might put some pep in your step throughout the rest of the day, leading you to move around a bit more than you otherwise would have. Conversely, if I went for a morning jog, I’d feel tired and sluggish for the rest of the day, and therefore move around quite a bit less than I otherwise would have. So, if you burn 500 calories on your morning jog, your total energy expenditure for the day might actually increase by a total of 600 calories – more than you burned on your jog. If I went for the same jog, my total energy expenditure for the day may only increase by a total of 300 calories – less than I burned on my jog.

Research has shown that the second scenario is quite a bit more common: total daily energy expenditure only increases by about 72 calories, on average, for every 100 additional calories you expend via physical activity. Furthermore, behind that simple average, there’s quite a bit of nuance.

For example, if you’re relatively sedentary, burning 100 additional calories via physical activity will increase your total daily energy expenditure by some figure closer to 100 calories (in other words, it may increase by 90 calories, instead of 72); if you’re already very active, burning 100 additional calories via physical activity will increase your total daily energy expenditure by some amount that’s further from 100 calories (in other words, it may only increase by 50 calories). Additionally, if you’re attempting to gain weight, additional exercise has a larger impact on total daily energy expenditure; if you’re attempting to lose weight, additional exercise has a smaller impact on total daily energy expenditure. Finally, it also appears that people with higher levels of body fatness experience more energy compensation than their leaner counterparts.

So, if you burn 500 calories via exercise, how much does your total daily energy expenditure increase (and, by extension, how much should you increase your daily calorie targets to account for the energy you burned via exercise)?

Well, if you’re relatively sedentary and attempting to gain weight, a 500-calorie increase in exercise energy expenditure may actually result in a 500-calorie increase in total daily energy expenditure. If you’re already quite active and attempting to lose weight, burning an additional 500 calories via exercise may result in a mere 200 calorie increase in total daily energy expenditure. However, those would still just be rough approximations, because there’s still considerable inter-individual variability lurking beneath these trends.

In other words, if you burn 500 calories via exercise, your total energy expenditure for the day may only increase by 100 calories, or it might increase by 600 calories. An increase of ~350 calories would be my best guess based on the research, but there’s a very wide range of plausible values. So, even if you knew exactly how many calories you burned during an exercise session, that information would only be minimally informative for adjusting your daily calorie targets. In essence, we know that your daily calorie target probably shouldn’t increase by exactly 500 calories (in other words, the way that logging exercise works in other apps runs counter to the research on energy compensation). However, we don’t know what the appropriate increase should actually be for the individual.
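To see how far the additive assumption can drift from reality, the sketch below applies a few compensation levels to the same 500-calorie workout. The 72-per-100 figure is the average cited above; the other value is illustrative, and the function name is hypothetical.

```python
def tdee_increase(exercise_kcal, kcal_retained_per_100):
    """Increase in total daily energy expenditure after energy compensation."""
    return exercise_kcal * kcal_retained_per_100 / 100


exercise = 500
scenarios = [
    ("additive assumption", 100),
    ("research average", 72),
    ("heavy compensation (illustrative)", 40),
]
for label, retained in scenarios:
    print(f"{label}: +{tdee_increase(exercise, retained):.0f} kcal")
```

An app that adds the full 500 calories to your target is implicitly using the first row, regardless of which row actually describes you.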

With MacroFactor, we remove the guesswork. If you start exercising more and you start losing weight considerably faster or gaining weight considerably slower, that tells us that the additional exercise has increased your total daily energy expenditure by quite a lot. Conversely, if you start exercising more, but the change in exercise has minimal impact on your rate of weight gain or weight loss, that tells us that you’re experiencing a larger amount of energy compensation – additional exercise didn’t have a particularly large effect on your total daily energy expenditure. In short, MacroFactor can calculate the impact of exercise on total energy expenditure, and make appropriate dietary adjustments. Conversely, simply logging exercise calories to additively increase your daily calorie targets necessarily involves making an assumption (an assumption that’s typically incorrect) about the relationship between exercise energy expenditure and total energy expenditure, with no mechanism to course-correct if the baked-in assumption happens to be wrong.

Advantage 2: Accounting for logging and digestive idiosyncrasies

There’s another sneaky benefit of determining energy expenditure based on dietary data – instead of data from wearables – for the purpose of determining dietary targets: using nutrition data accounts for logging and digestive idiosyncrasies that a wearable device wouldn’t be privy to.

Let’s talk about poop.

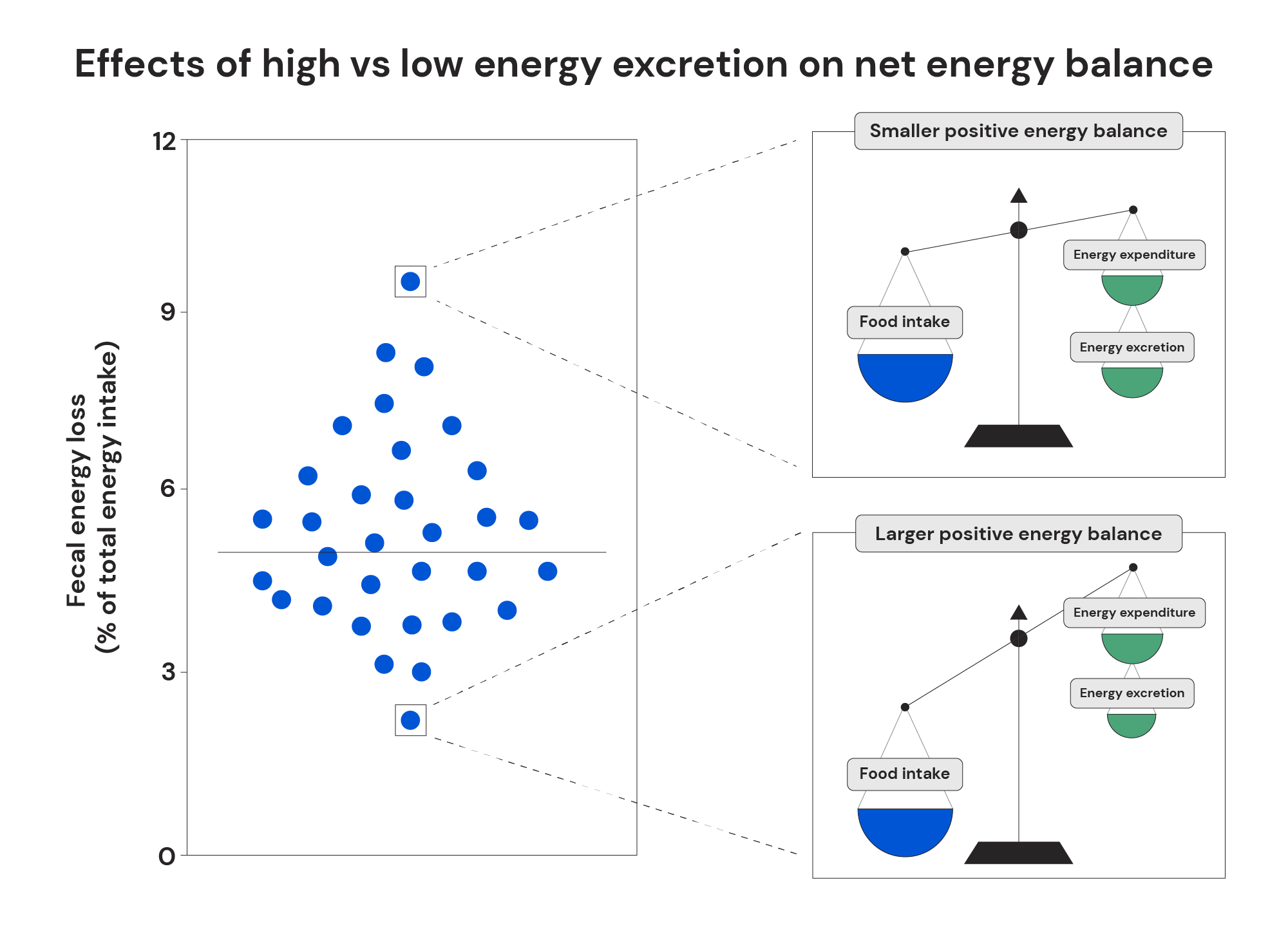

The vast majority of the energy you consume from food is absorbed in your digestive tract…but not all of it. In people without malabsorptive conditions, about 2-10% (or even up to 20%) of the energy you consume winds up being excreted in your urine and feces. The relative proportion of energy excreted likely depends on whether you’re in an energy deficit or surplus, the amount of fiber you consume, and probably a host of other yet-to-be-identified factors.

So, how many calories survive your digestive tract, only to be excreted in your feces? Unless you make a habit of burning all of your poop in a bomb calorimeter, I suspect you can’t answer that question.

However, energy excretion is a non-trivial factor when discussing energy regulation and the calories in/calories out model. If you eat 2500 calories per day, and you excrete 10% of those calories, you’re excreting 250 calories per day. Conversely, if you only excrete 2% of the calories you consume, you’re only excreting 50 calories per day. That 200-calorie difference is roughly equivalent to the energy cost of an average-sized person running two miles.

People are free to debate how to best account for fecal energy excretion in the calories in/calories out model of body weight regulation. You may prefer to lump it into the “calories out” side of the equation (it is energy leaving your body after all, albeit not in the form of heat or mechanical work). I think it’s actually preferable to simply deduct it from the “calories in” side of the equation (scroll down to footnote 1 if you care to read my reasoning). Of course, this is primarily a semantic difference most of the time. If you treat the energy in your feces as a component of “calories out,” or if you treat the energy in your feces as a deduction from “calories in,” you ultimately wind up in the same place – a wearable device used to estimate energy expenditure can’t know how much energy you’re excreting in your feces, and it can’t know the extent to which your energy intake targets should increase to account for fecal energy excretion. However, regardless of how you account for fecal energy excretion, it can have a non-trivial impact on total energy balance.

Footnote 1: If you want to be as rigorous as possible, the energy in the food you consume doesn’t really count as “calories in” until the energetic nutrients from those foods make it across the intestinal lining. Your entire alimentary canal (your mouth, esophagus, stomach, intestines, and anus) is continuous with the outside world. In other words, you could pass a string down your throat and out of your anus without ever puncturing another tissue – in a way, humans are like donuts. As a simple analogy, if you had a hole in your arm, and you dropped a piece of food through that hole, you wouldn’t count that piece of food as “calories in” when it entered the hole and “calories out” when it exited the hole. The same concept applies to your alimentary canal. If you treated “calories in” as all of the energy in the food you consumed, rather than the energy absorbed by your intestines, that would imply that the energy in your feces is energy you expended. However, you’re quite clearly not expending the energy in your feces – you simply didn’t absorb or utilize it in the first place.

Similarly, it’s virtually impossible to perfectly track energy intake. For example, cooking meat and vegetables can impact their net metabolizable energy. Cooling cooked starches can impact their net metabolizable energy (via retrogradation of “normal” starch into resistant starch). You probably don’t weigh out every gram of grease in a pan after cooking meat or sautéing vegetables. You probably can’t perfectly estimate how much fat drips off when grilling foods. You may even prefer to just eyeball measurements for some foods you eat (you will never catch me precisely measuring spinach), or skip logging some things altogether (there’s some maltodextrin in most granulated non-nutritive sweeteners, and I doubt most people log that). Your kitchen scale may not be perfectly calibrated. Some of the foods you eat may have labeling errors (calorie and macronutrient labeling errors of up to 20% are allowed by law). You even extract more energy from foods if you blend them up first (for example, you derive more metabolizable energy from almond butter than from an equivalent amount of whole almonds).

In short, it’s impossible to perfectly track food intake. No matter how hard you try, you will always have some amount of food logging error. And, if you eat similar foods and log foods in similar ways most of the time, the food logging errors you have will tend to have a similar directionality.

So, why does all of this matter?

Well, if you’re using an estimate of energy expenditure from a wearable device to impact your daily calorie targets, there’s no way for the wearable device to “know” anything about the idiosyncrasies of your food logging habits, and there’s no way for it to “know” anything about fecal energy excretion. So, if you use data from wearables to inform your energy intake targets, there will be plenty of important information that those devices simply can’t account for.

To illustrate, let’s assume that we live in a world where wearable devices can perfectly calculate your daily energy expenditure. You’re aiming to maintain weight, and your wearable device tells you that you burned 2500 calories today.

Simple enough, right? You can aim to consume 2500 calories, and maintain energy balance.

Not so fast.

Maybe you made some fried eggs for breakfast, and you put a tablespoon of butter in the pan. You logged a tablespoon of butter for the meal, but you didn’t mop every last drop of butter from the pan and from your plate. Maybe you had some leftover rice for lunch; during the cooling process, some of the starch in the rice became resistant starch, reducing its net caloric content. At dinner, maybe you ate two bananas, and you didn’t feel like weighing them, so you just logged them as two medium bananas – however, they were both a bit smaller than a “standard” medium banana.

None of these little food logging errors are all that important, but they might mean you wound up consuming 2400 calories instead of 2500.

Now, let’s assume that 6% of the energy you consumed survived your digestive tract, and was excreted in your feces. That’s roughly another 150 calories.

In total, you’d still wind up in a non-trivial calorie deficit: you’re burning 2500 calories per day, while deriving 2250 calories of energy from the foods you’re eating (consistent with losing about half a pound per week), in spite of doing a very good job of logging your food and in spite of knowing your true daily energy expenditure with perfect accuracy.
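The arithmetic in this scenario can be checked with a short sketch. It assumes the common rule of thumb of roughly 3500 calories per pound of body weight, which is consistent with the half-pound-per-week figure above; the function name is hypothetical. Note that 6% of 2400 is 144 calories, so the exact result lands very close to the rounded numbers in the text.

```python
KCAL_PER_POUND = 3500  # common rule of thumb, assumed here


def weekly_weight_change_lb(expenditure, eaten, excreted_fraction):
    """Pounds gained (+) or lost (-) per week, based on absorbed intake vs. expenditure."""
    absorbed = eaten * (1 - excreted_fraction)
    return (absorbed - expenditure) * 7 / KCAL_PER_POUND


# Logged 2500 kcal, actually ate 2400, excreted 6% of that, and burned 2500.
print(round(weekly_weight_change_lb(2500, 2400, 0.06), 2))
```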

So, how would MacroFactor handle this exact scenario?

Based on your weight and nutrition data, MacroFactor would see that you’re logging 2500 calories per day and losing about half a pound per week. As a result, it would estimate that you’re burning 2750 calories per day, and recommend that you consume about 2750 calories per day to maintain weight.

At first blush, that may seem inappropriate. Remember, this scenario assumes that your daily energy expenditure is known to be 2500 calories per day. However, based on the idiosyncrasies of your food logging and the energy you excrete in your feces, 2750 calories per day is actually the perfect intake target for you – when you log 2750 calories, you end up consuming 2500 calories of net metabolizable energy.

When you estimate energy expenditure from weight and nutrition data, errors of any reasonable magnitude simply wash out when making nutrition recommendations.
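The underlying idea is plain energy balance run in reverse. The sketch below is a deliberately simplified illustration, not MacroFactor's actual algorithm: it assumes roughly 3500 calories per pound of weight change, and the function name is hypothetical.

```python
KCAL_PER_POUND = 3500  # rule-of-thumb energy content of a pound of body weight (assumption)


def estimated_expenditure(logged_kcal_per_day, weight_change_lb_per_week):
    """Back-calculate daily expenditure from logged intake and the weight trend."""
    daily_balance = weight_change_lb_per_week * KCAL_PER_POUND / 7
    return logged_kcal_per_day - daily_balance


# Logging 2500 kcal/day while losing half a pound per week.
print(estimated_expenditure(2500, -0.5))
```

Because the estimate is expressed in "logged calories," any consistent gap between what you log and what you absorb is carried straight into the resulting target.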

In fact, this principle even applies if you have considerably larger (but relatively consistent) tracking errors. For example, let’s assume that you don’t care that much about perfectly logging your food.

Harkening back to the accuracy vs. reliability discussion, maybe you always have a splash of cream in your morning coffee, and since you have the same splash of cream every day, you don’t bother logging it. Maybe you’re happy to simply not track foods with sufficiently low energy density (fibrous vegetables, for example). Maybe you eyeball serving sizes for cooking oils, spreads, sauces, and condiments.

We know that most people underestimate energy consumption, so let’s assume that all of these little inaccuracies add up to a 30% tracking error: you think you’re consuming 2500 calories per day, but you’re actually consuming 3250 calories per day.

That may seem like an insurmountable tracking error…but it’s actually fine, since MacroFactor’s intake targets have your tracking error “baked in,” as long as it’s reasonably consistent. Let’s once again assume that you burn 2500 calories per day, and you’re aiming to maintain your weight.

Here’s a step-by-step illustration of how this scenario would play out in MacroFactor:

- You’d aim to consume 2500 calories per day. However, due to your 30% tracking error, you’d actually be consuming 3250 calories per day.

- Since an actual intake of 3250 calories per day would result in a calorie surplus, you’d start gaining a bit of weight.

- MacroFactor would see that when you log 2500 calories per day, you gain weight. So, it would start decreasing your calorie intake targets.

- After a couple of weeks, it would settle on a calorie intake target of about 1925 calories per day. Given your 30% tracking error, aiming for 1925 calories per day would result in an actual intake of 2500 calories per day.

So, even with some pretty significant tracking errors, MacroFactor would settle on appropriate intake targets, such that your actual energy intake matches your actual energy needs. This self-correcting mechanism is pretty powerful – it allows MacroFactor to make appropriate nutrition recommendations, even if user inputs aren’t perfect.
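The steps above can be sketched as a small simulation. This is a toy model under stated assumptions, not MacroFactor's algorithm: the weekly update rule and the `smoothing` factor are hypothetical, and the number of weeks it takes to converge depends entirely on that made-up factor. What it does show is that the target settles where actual intake matches actual expenditure.

```python
def simulate_targets(true_expenditure, tracking_error, start_target, weeks, smoothing=0.5):
    """Simulate weekly target updates when actual intake = logged intake * (1 + error).

    `smoothing` is a hypothetical damping factor, not a MacroFactor parameter.
    """
    target = start_target
    history = [target]
    for _ in range(weeks):
        logged = target                             # the user hits the target on paper
        actual = logged * (1 + tracking_error)      # but really eats more than logged
        daily_surplus = actual - true_expenditure   # this is what drives weight change
        estimated = logged - daily_surplus          # expenditure inferred from log + weight trend
        target += smoothing * (estimated - target)  # nudge the target toward the estimate
        history.append(target)
    return history


history = simulate_targets(2500, 0.30, start_target=2500, weeks=10)
print([round(t) for t in history])
print(round(2500 / 1.30))  # closed-form value the simulation converges toward
```

The closed-form version is simply true expenditure divided by (1 + tracking error): 2500 / 1.3 is about 1923, which matches the roughly 1925-calorie target described above.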

This may seem counterintuitive at first, but if you want to know your daily energy expenditure for the purpose of generating calorie intake targets to gain, lose, or maintain weight, MacroFactor’s algorithm is actually better than a theoretical system that would be able to quantify your total daily energy expenditure with perfect accuracy. Such a system could tell you that you’re burning 2500 calories per day with perfect accuracy, but you can’t guarantee that you’re consuming 2500 calories per day with perfect accuracy, due to variance in the net metabolizable energy of foods (due to labeling errors or differences in preparation methods), digestive quirks (do you excrete 2% of your daily calorie intake, or 12%?), and good old-fashioned tracking errors.

In other words, if your actual calorie intake is 20% higher than what you log, your calorie target should be about 17% lower than what it theoretically “should” be in a world where you could perfectly quantify calorie intake (your energy needs divided by 1.2). If your actual calorie intake is 20% lower than what you log, your calorie target should be 25% higher than what it theoretically “should” be in a world where you could perfectly quantify calorie intake (your energy needs divided by 0.8). Since MacroFactor’s inputs (the food intake that you log) contain the error inherent in how you log food and how your body processes food, its outputs (your calorie recommendations for gaining, losing, or maintaining weight) will reflect both the direction and magnitude of those errors, resulting in ideal calorie targets that incorporate and account for the quirks and idiosyncrasies of your digestive system, food logging habits, and food choices.

The disadvantage of MacroFactor’s system

There’s one disadvantage to MacroFactor’s system for generating nutrition recommendations, when compared to either a) simply using a wearable device to estimate your total energy expenditure each day or b) using a wearable device to estimate caloric expenditure during exercise in order to change your daily calorie targets.

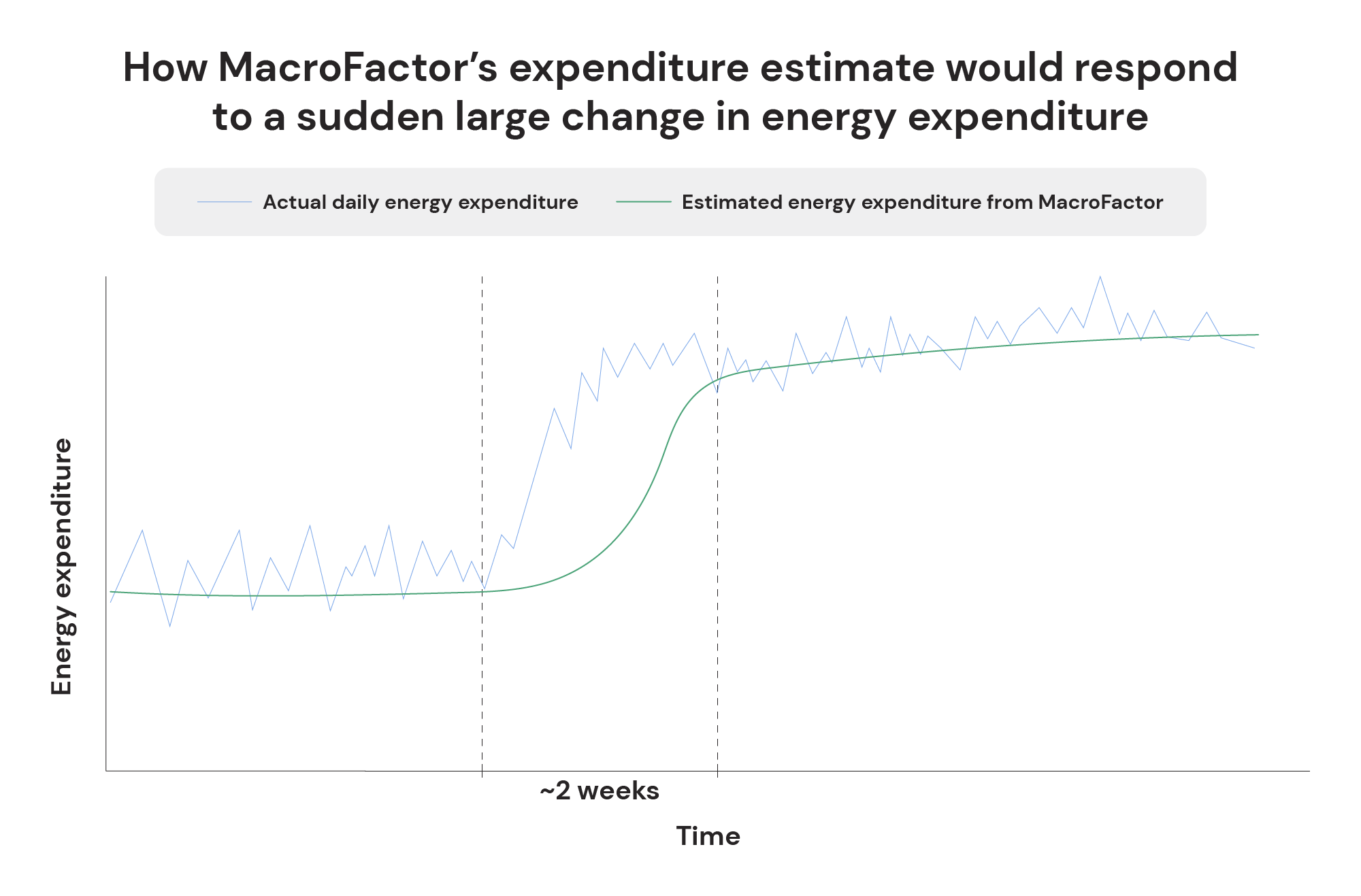

By design, MacroFactor is backward-looking and follows trends, whereas wearable devices attempt to provide moment-in-time estimates of energy expenditure.

So, if you’re typically pretty sedentary, but you run a marathon tomorrow, MacroFactor won’t (by default) know to recommend a higher calorie intake to help you fuel up for your marathon, and it won’t “know” that you burned a ton of extra energy when running a marathon until after the fact. Conversely, despite all of the drawbacks of wearable devices, any wearable will be able to tell that you expended more energy on the day you ran a marathon.

The same concept applies when discussing significant lifestyle changes. If you go from being very sedentary to very active (or vice versa), a wearable device will probably pick up on that change right away – its actual estimate of your energy expenditure may not be particularly accurate or reliable, but it will be able to tell right away that your overall energy expenditure has increased or decreased considerably. MacroFactor, on the other hand, will generally take about two weeks to fully “price in” those changes.

In other words, MacroFactor is far more useful for generating nutrition targets over the medium-to-long term, but wearables are far better at picking up on fluctuations in energy expenditure over the short term.
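The lag inherent in any trend-following estimate can be illustrated with a toy example. The sketch below uses a plain 14-day trailing mean, chosen only to mirror the “about two weeks” figure above. It is not MacroFactor’s algorithm, and the function name, window length, and calorie numbers are all hypothetical.

```python
from collections import deque


def trailing_mean_estimates(daily_values, window=14):
    """Return a trailing-mean estimate for each day (toy trend-follower)."""
    recent = deque(maxlen=window)  # keeps only the most recent `window` days
    estimates = []
    for value in daily_values:
        recent.append(value)
        estimates.append(sum(recent) / len(recent))
    return estimates


# Hypothetical scenario: 14 sedentary days, then a sustained jump in activity.
true_expenditure = [2200] * 14 + [2700] * 21
trend = trailing_mean_estimates(true_expenditure)

for day in (14, 15, 21, 28):
    print(f"day {day}: true {true_expenditure[day - 1]}, trend {trend[day - 1]:.0f}")
```

In this toy model, the trend is only halfway to the new level a week after the change (day 21) and catches up fully after two weeks (day 28). A moment-in-time estimate would register the jump on day 15.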

Thankfully, there are two simple workarounds that largely negate this drawback.

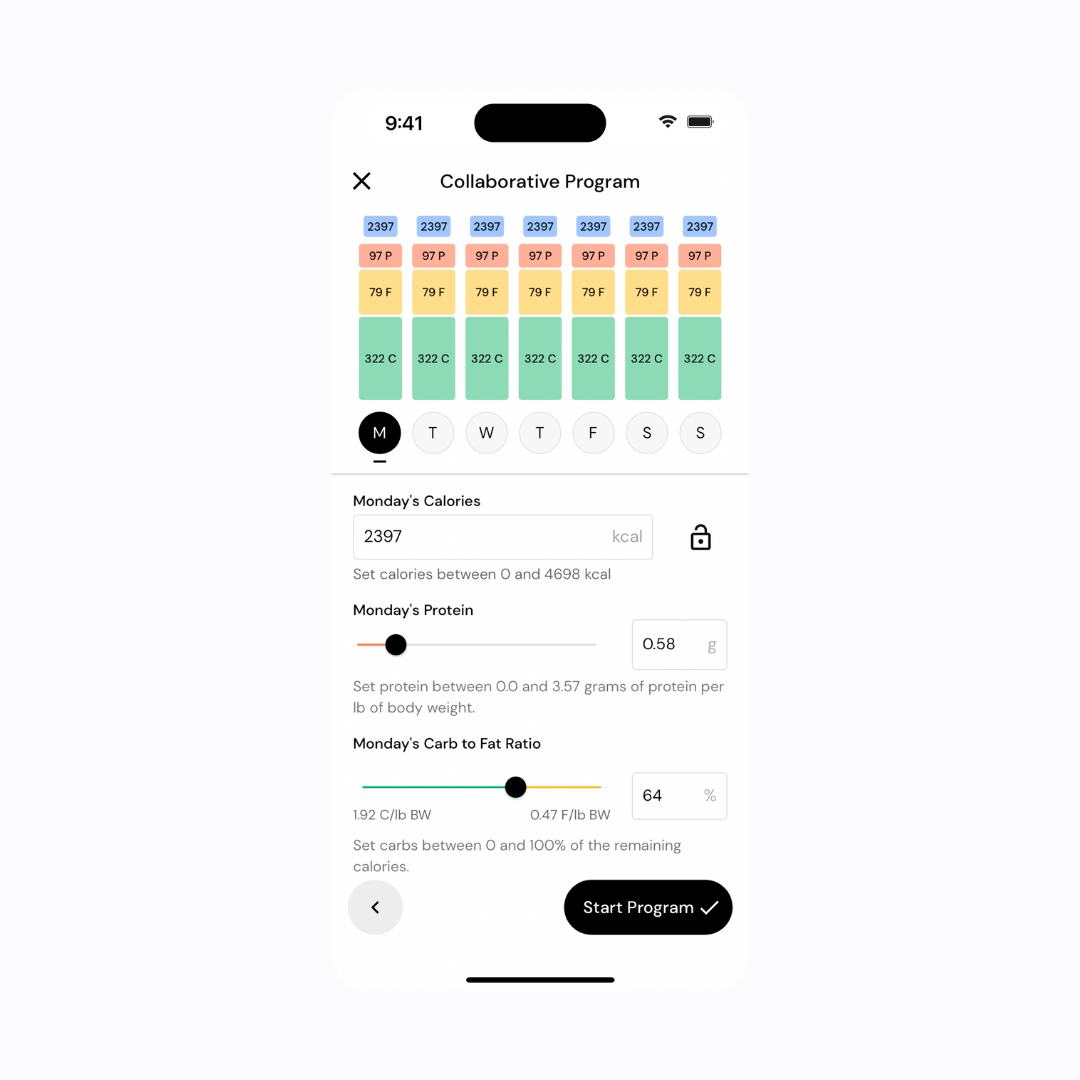

First, we give you the flexibility to account for different energy needs day-to-day. If you set up a collaborative plan in MacroFactor, you can shift your weekly calories around, in order to better align your daily calorie intake targets with your anticipated energy needs.

For example, if you have a grueling workout or go for a long run three days per week, you can budget more calories for those days, and fewer calories for rest days. Instead of aiming for 2500 calories each day (17,500 for the week), you could aim for 2800 calories on your training days, and 2275 calories on your rest days. Ultimately, your weekly calorie budget is the same, but you can align your daily targets with your daily energy needs.
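The arithmetic behind that example is simple enough to sketch. The helper below is hypothetical (it isn’t part of MacroFactor); it just holds the weekly budget fixed and solves for the rest-day target.

```python
def rest_day_target(daily_avg_kcal, training_days, training_day_kcal, days_per_week=7):
    """Return the rest-day calorie target that keeps the weekly budget unchanged."""
    rest_days = days_per_week - training_days
    if rest_days <= 0:
        raise ValueError("need at least one rest day to absorb the difference")
    weekly_budget = daily_avg_kcal * days_per_week
    remaining = weekly_budget - training_days * training_day_kcal
    return remaining / rest_days


# The example from the text: 2500 kcal/day average, three 2800 kcal training days.
print(rest_day_target(2500, 3, 2800))  # 2275.0
```

Three days at 2800 calories plus four days at 2275 calories adds up to the same 17,500-calorie week.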

Second, MacroFactor’s algorithms and adjustments don’t rely on the user to perfectly adhere to our recommendations. So, if your habitual activity levels significantly increase (maybe you’re getting back into serious exercise after an injury, or you’re starting a more physically demanding job), you could simply eat a bit more than your recommended calorie targets for a couple of weeks until the change in activity levels has been fully “priced in.”

Now, I’ll certainly admit that these aren’t perfect workarounds. In an ideal world, we’d be able to know exactly how many more calories you burn on workout days than rest days, thus allowing us to generate precise day-by-day recommendations. In that same ideal world, we’d be able to immediately know precisely how much your typical energy needs increase or decrease due to a significant change in activity levels. As it is, MacroFactor can’t do that … but neither can wearable devices (remember, wearable devices have poor accuracy and unknown precision for estimating energy expenditure).

In short, MacroFactor does a very good job of providing appropriate calorie intake targets over the medium-to-long term, and there is no perfect system for tweaking those targets in the short term. As such, we think it makes the most sense to leave short-term fine-tuning up to user discretion.

A brief recap

I realize this is a pretty long, pretty dense article, so I figure it’s worth recapping the key points to wrap things up.

- Wearable devices are known to do a poor job of estimating energy expenditure. We don’t know if those inaccurate estimates are even precise and reliable.

- Even if you could perfectly calculate energy expenditure during exercise, that would still be of limited utility due to energy compensation – increasing energy expenditure via exercise doesn’t increase total daily energy expenditure in a particularly predictable fashion.

- In addition to issues associated with energy compensation, “eating back” calories expended via exercise may also lead some people to develop an undesirable relationship with exercise.

- Even if wearable devices could accurately measure energy expenditure, they’d still be of limited utility for generating energy intake targets, since a perfect measure of energy expenditure would be unable to account for idiosyncrasies related to individualized differences in digestion, food logging habits, food preparation methods, etc.

- Since those idiosyncrasies are already factored into MacroFactor’s inputs (the food you log), they’re also accounted for in MacroFactor’s outputs (our nutrition recommendations and adjustments).

- Since no system can perfectly account for very short-term fluctuations in energy needs, we leave those adjustments up to user discretion.

Footnote 1:

If you want to be as rigorous as possible, the energy in the food you consume doesn’t really count as “calories in” until the energetic nutrients from those foods make it across the intestinal lining. Your entire alimentary canal (your mouth, esophagus, stomach, intestines, and anus) is continuous with the outside world. In other words, you could pass a string down your throat and out of your anus without ever puncturing any tissue. In a way, humans are like donuts.

As a simple analogy, if you had a hole in your arm, and you dropped a piece of food through that hole, you wouldn’t count that piece of food as “calories in” when it entered the hole and “calories out” when it exited the hole. The same concept applies to your alimentary canal. If you treated “calories in” as all of the energy in the food you consumed, rather than the energy absorbed by your intestines, that would imply that the energy in your feces is energy you expended. However, you’re quite clearly not expending the energy in your feces – you simply didn’t absorb or utilize it in the first place.

Of course, this is primarily a semantic difference most of the time. If you treat the energy in your feces as a component of “calories out,” or if you treat the energy in your feces as a deduction from “calories in,” you ultimately wind up in the same place – a wearable device used to estimate energy expenditure can’t know how much energy you’re excreting in your feces, and it can’t know the extent to which your energy intake targets should increase to account for fecal energy excretion.